Sentiment Analysis with TensorFlow 2 and Keras using Python

— Deep Learning, Keras, TensorFlow, NLP, Sentiment Analysis, Python — 3 min read


TL;DR Learn how to preprocess text data using the Universal Sentence Encoder model. Build a model for sentiment analysis of hotel reviews.

This tutorial will show you how to develop a Deep Neural Network for text classification (sentiment analysis). We’ll skip most of the preprocessing using a pre-trained model that converts text into numeric vectors.

You’ll learn how to:

- Convert text to embedding vectors using the Universal Sentence Encoder model

- Build a hotel review Sentiment Analysis model

- Use the model to predict sentiment on unseen data

Run the complete notebook in your browser

The complete project on GitHub

Universal Sentence Encoder

Unfortunately, Neural Networks don’t understand text data. To deal with the issue, you must figure out a way to convert text into numbers. There are a variety of ways to solve the problem, but most well-performing models use Embeddings.

In the past, you had to do a lot of preprocessing: tokenization, stemming, removing punctuation, removing stop words, and more. Nowadays, pre-trained models offer built-in preprocessing. You might still go the manual route, but you can get a quick and dirty prototype with high accuracy by using pre-trained models from libraries.
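For contrast, here is a minimal sketch of the manual route in plain Python: lowercasing, punctuation removal, whitespace tokenization, and stop-word filtering. The stop-word list is a tiny hypothetical one chosen for illustration (libraries such as NLTK or spaCy ship fuller lists), and stemming is left out because it would need an extra library.

```python
import string

# Hypothetical, deliberately tiny stop-word list for illustration only
STOP_WORDS = {"the", "is", "a", "an", "and", "was", "to"}

def manual_preprocess(text):
    """Lowercase, strip punctuation, split on whitespace, drop stop words."""
    text = text.lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    tokens = text.split()
    return [t for t in tokens if t not in STOP_WORDS]

print(manual_preprocess("The location is great, and the staff was friendly!"))
# ['location', 'great', 'staff', 'friendly']
```

Every one of these choices (which punctuation to drop, which words count as stop words) is a decision you would have to make and tune yourself, which is exactly the work a pre-trained encoder saves you.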

The Universal Sentence Encoder (USE) encodes sentences into embedding vectors. The model is freely available on TF Hub. It has great accuracy and supports multiple languages. Let’s have a look at how we can load the model:

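```python
import tensorflow_hub as hub
import tensorflow_text  # noqa: F401, registers the SentencePiece ops the multilingual model needs

use = hub.load("https://tfhub.dev/google/universal-sentence-encoder-multilingual-large/3")
```

Next, let’s define two sentences that have a similar meaning: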

```python
sent_1 = ["the location is great"]
sent_2 = ["amazing location"]
```

Using the model is really simple:

```python
emb_1 = use(sent_1)
emb_2 = use(sent_2)
```

What is the result?

```python
emb_1.shape  # displayed as the output of a notebook cell
```

```
TensorShape([1, 512])
```

Each sentence you pass to the model is encoded as a vector with 512 elements. You can think of USE as a tool to compress any textual data into a vector of fixed size while preserving the similarity between sentences.

How can we calculate the similarity between two embeddings? USE produces approximately unit-length (normalized) vectors, so their inner product is approximately their cosine similarity:

```python
import numpy as np

print(np.inner(emb_1, emb_2).flatten()[0])
```

```
0.79254687
```

Values closer to 1 indicate more similarity, so those two sentences are quite similar, indeed!
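To make the "normalized" remark concrete, here is a small sketch with made-up 2-D vectors (not USE output): the general cosine similarity divides the dot product by both norms, and once the vectors are scaled to unit length the plain inner product gives the same number.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine similarity between two 1-D vectors of any length."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

a = np.array([3.0, 4.0])
b = np.array([4.0, 3.0])
print(cosine_similarity(a, b))  # 0.96

# Scale both vectors to unit length; the inner product alone now
# equals the cosine similarity (up to floating-point rounding)
a_unit = a / np.linalg.norm(a)
b_unit = b / np.linalg.norm(b)
print(np.inner(a_unit, b_unit))
```

Because USE embeddings are already close to unit length, `np.inner` is enough in practice; `cosine_similarity` is the safer choice for embeddings that are not normalized.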

We’ll use the model for the preprocessing step. Note that you can use it for almost any NLP task out there, as long as the language you’re using is supported.

Hotel Reviews Data

The dataset is hosted on Kaggle and is provided by Jiashen Liu. It contains European hotel reviews that were scraped from Booking.com.

This dataset contains 515,000 customer reviews and scores for 1,493 luxury hotels across Europe. The geographical locations of the hotels are also provided for further analysis.

Let’s load the data:

```python
import pandas as pd

df = pd.read_csv("Hotel_Reviews.csv", parse_dates=['Review_Date'])
```

While the dataset is quite rich, we’re interested only in the review text and the review score. Let’s get those:

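```python
# Combine the negative and positive parts into a single review text
df["review"] = df["Negative_Review"] + df["Positive_Review"]
# Scores below 7 are labeled "bad", everything else "good"
df["review_type"] = df["Reviewer_Score"].apply(
    lambda x: "bad" if x < 7 else "good"
)

df = df[["review", "review_type"]]
```

Any review with a score below 7 is marked as “bad”.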
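The cutoff is a strict `x < 7`, so, assuming scores can take decimal values, a score like 6.7 still counts as bad while exactly 7.0 counts as good. A small sketch of the same rule on a few made-up scores (`label_review` is a hypothetical helper name):

```python
def label_review(score, threshold=7):
    """Same rule as the lambda above: strictly below the threshold is 'bad'."""
    return "bad" if score < threshold else "good"

# Made-up scores, chosen to sit on both sides of the cutoff
scores = [2.5, 6.7, 7.0, 9.6]
print([label_review(s) for s in scores])
# ['bad', 'bad', 'good', 'good']
```

With pandas, `df["Reviewer_Score"].apply(label_review)` applies exactly this rule to the whole column; the threshold is a modeling choice, and moving it changes how imbalanced the two classes are.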

Exploration

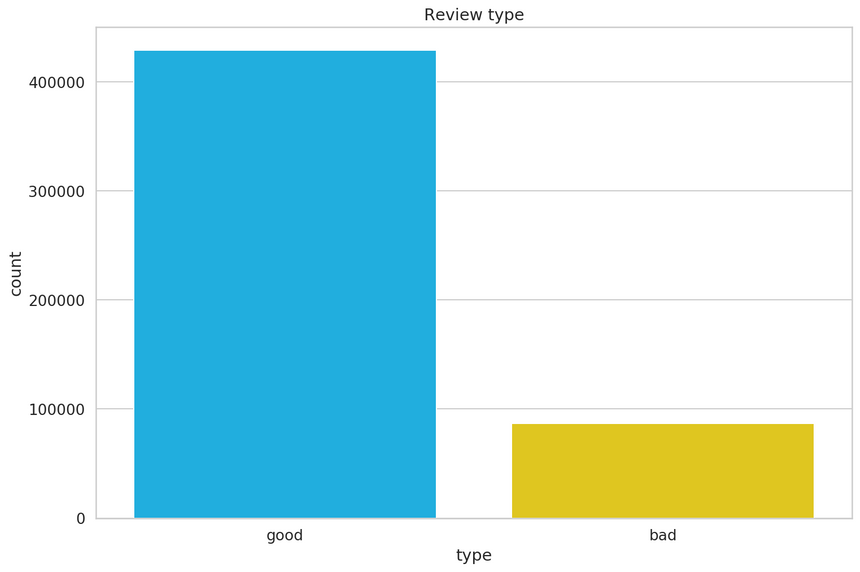

How many of each review type we have?

We have a severe imbalance in favor of good reviews. We’ll have to do something about that. However, let’s have a look at the most common words contained within the positive reviews:

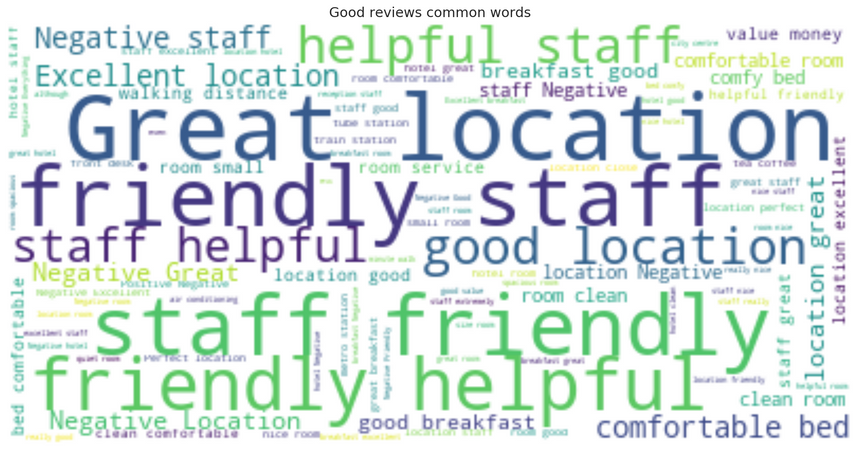

“Location, location, location”: a pretty common saying in the tourism business. Staff friendliness seems to be the second most important quality for positive reviewers.

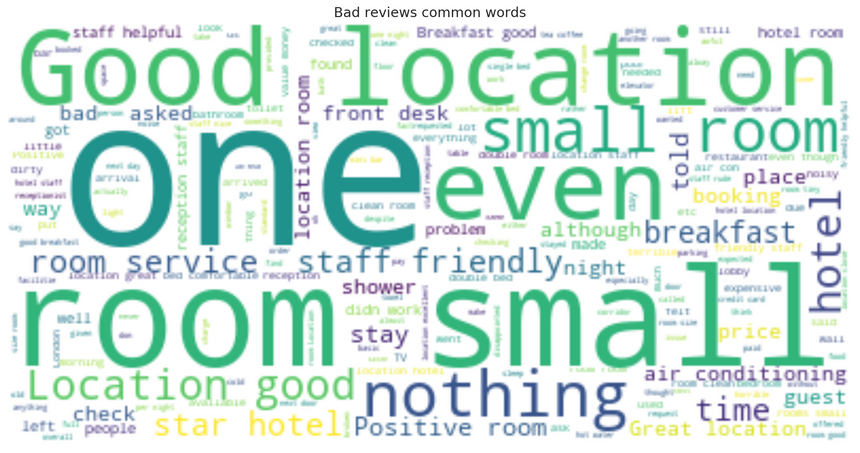

How about the bad reviews?

Much more diverse set of phrases. Note that “good location” is still present. Room qualities are important, too!

Preprocessing

We’ll deal with the review type imbalance by equating the number of good ones to that of the bad ones:

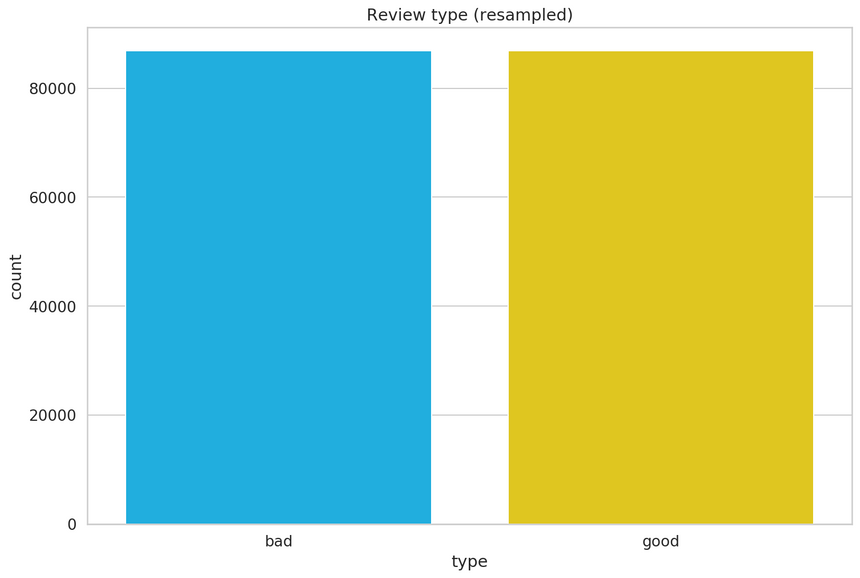

1good_df = good_reviews.sample(n=len(bad_reviews), random_state=RANDOM_SEED)2bad_df = bad_reviews3review_df = good_df.append(bad_df).reset_index(drop=True)4print(review_df.shape)1(173702, 2)Let’s have a look at the new review type distribution:

We have over 80k examples for each type. Next, let’s one-hot encode the review types:

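```python
from sklearn.preprocessing import OneHotEncoder

# Categories are sorted alphabetically: column 0 is "bad", column 1 is "good".
# scikit-learn < 1.2 names this argument `sparse` instead of `sparse_output`.
type_one_hot = OneHotEncoder(sparse_output=False).fit_transform(
    review_df.review_type.to_numpy().reshape(-1, 1)
)
```

We’ll split the data into training and test sets: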

```python
from sklearn.model_selection import train_test_split

train_reviews, test_reviews, y_train, y_test = train_test_split(
    review_df.review,
    type_one_hot,
    test_size=.1,
    random_state=RANDOM_SEED
)
```

Finally, we can convert the reviews to embedding vectors:

```python
import numpy as np
import tensorflow as tf
from tqdm import tqdm

def embed(reviews):
    """Encode each review with USE; returns an array of shape (num_reviews, 512)."""
    embeddings = []
    for r in tqdm(reviews):
        emb = use([r])  # a batch of one review -> shape (1, 512)
        embeddings.append(tf.reshape(emb, [-1]).numpy())  # flatten to (512,)
    return np.array(embeddings)

X_train = embed(train_reviews)
X_test = embed(test_reviews)
print(X_train.shape, y_train.shape)
```

```
(156331, 512) (156331, 2)
```

We have ~156k training examples and a roughly equal distribution of review types. How well can we predict review sentiment with that data?
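The loop above calls the model once per review, which is slow for ~170k reviews. Since the model also accepts a list of strings (as with `use(sent_1)` earlier), a common speed-up is to encode the reviews in batches. Below is a minimal sketch; `embed_in_batches` and `fake_encode` are hypothetical names, and `fake_encode` is a stand-in returning two toy features per text, so the sketch runs without downloading USE.

```python
import numpy as np

def embed_in_batches(encode, texts, batch_size=256):
    """Encode `texts` in chunks and stack the results into one 2-D array.

    `encode` is any callable mapping a list of strings to an array-like of
    shape (len(batch), dim), for example the `use` model loaded earlier.
    Assumes `texts` is non-empty.
    """
    texts = list(texts)
    chunks = []
    for start in range(0, len(texts), batch_size):
        batch = texts[start:start + batch_size]
        chunks.append(np.asarray(encode(batch)))  # shape (len(batch), dim)
    return np.vstack(chunks)

# Hypothetical stand-in encoder: [character count, space count] per text
def fake_encode(batch):
    return np.array([[len(t), t.count(" ")] for t in batch], dtype=np.float32)

emb = embed_in_batches(
    fake_encode, ["great location", "rude staff at check in", "ok"], batch_size=2
)
print(emb.shape)  # (3, 2)
```

With the real model, `X_train = embed_in_batches(use, train_reviews)` should yield an equivalent `(num_reviews, 512)` array with far fewer model calls. The batch size of 256 is an arbitrary starting point to tune against available memory.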

Sentiment Analysis

Here, Sentiment Analysis is a binary classification problem: each review is either good or bad. Let’s use Keras to build a model:

```python
from tensorflow import keras

model = keras.Sequential()

model.add(
    keras.layers.Dense(
        units=256,
        input_shape=(X_train.shape[1], ),
        activation='relu'
    )
)
model.add(
    keras.layers.Dropout(rate=0.5)
)

model.add(
    keras.layers.Dense(
        units=128,
        activation='relu'
    )
)
model.add(
    keras.layers.Dropout(rate=0.5)
)

# One softmax output per class (column 0 = bad, column 1 = good)
model.add(keras.layers.Dense(2, activation='softmax'))
model.compile(
    loss='categorical_crossentropy',
    optimizer=keras.optimizers.Adam(0.001),
    metrics=['accuracy']
)
```

The model is composed of 2 fully-connected hidden layers. Dropout is used for regularization.
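As a quick sanity check on the model size, each `Dense` layer has `inputs * units` weights plus `units` biases, and `Dropout` layers add no parameters. The arithmetic below, under those standard definitions, should match the total that `model.summary()` reports for this architecture.

```python
def dense_params(inputs, units):
    """Weights (inputs * units) plus one bias per unit."""
    return inputs * units + units

# USE embedding size -> hidden 256 -> hidden 128 -> 2 output classes
layer_sizes = [512, 256, 128, 2]
per_layer = [dense_params(i, o) for i, o in zip(layer_sizes, layer_sizes[1:])]
print(per_layer)       # [131328, 32896, 258]
print(sum(per_layer))  # 164482
```

Almost 80% of the parameters sit in the first layer, which is where the 0.5 dropout right after it does most of its regularizing work.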

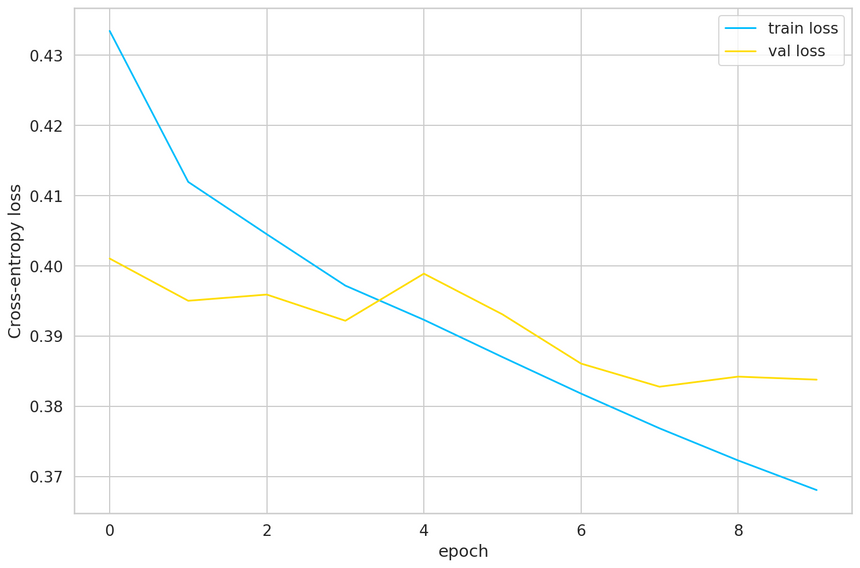

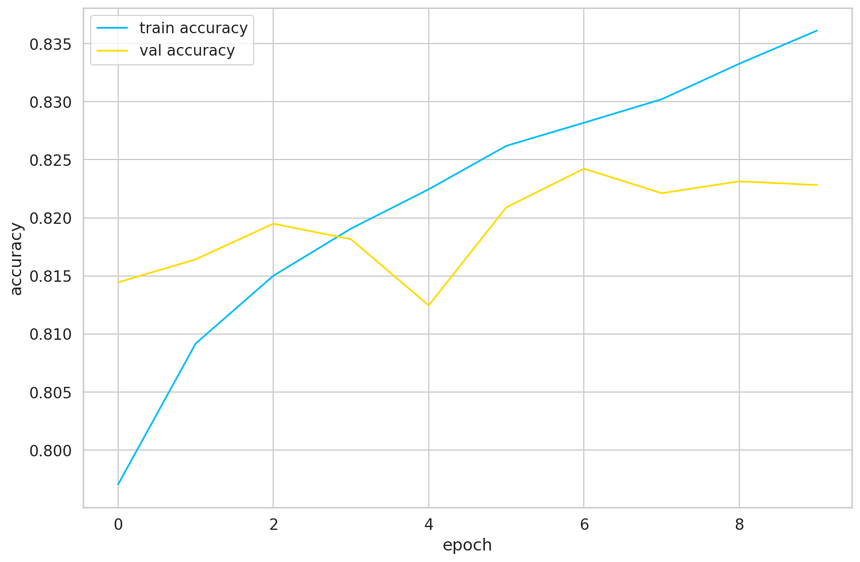

We’ll train for 10 epochs and use 10% of the data for validation:

1history = model.fit(2 X_train, y_train,3 epochs=10,4 batch_size=16,5 validation_split=0.1,6 verbose=1,7 shuffle=True8)

Our model starts to overfit at about epoch 8, so we won’t train it any longer. We got about 82% accuracy on the validation set. Let’s evaluate on the test set:

```python
model.evaluate(X_test, y_test)
```

```
[0.39665538506298975, 0.82044786]
```

The two values are the test loss and accuracy: 82% accuracy on the test set, too!
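Accuracy alone doesn’t show which class the model gets wrong more often. A confusion matrix does; below is a minimal NumPy sketch that works directly on the one-hot labels and softmax outputs used here (`confusion_from_one_hot` is a hypothetical helper; `sklearn.metrics.confusion_matrix` on the argmax indices gives the same table). The arrays are toy values, not results from the article.

```python
import numpy as np

def confusion_from_one_hot(y_true_one_hot, y_prob, num_classes=2):
    """Rows are true classes, columns are predicted classes (0 = bad, 1 = good)."""
    y_true = np.argmax(y_true_one_hot, axis=1)
    y_pred = np.argmax(y_prob, axis=1)
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # count each (true, predicted) pair
    return cm

# Toy labels and predicted probabilities, for illustration only
y_true = np.array([[1, 0], [1, 0], [0, 1], [0, 1]])
y_prob = np.array([[0.9, 0.1], [0.4, 0.6], [0.2, 0.8], [0.3, 0.7]])
print(confusion_from_one_hot(y_true, y_prob))
# [[1 1]
#  [0 2]]
```

On the real data, `confusion_from_one_hot(y_test, model.predict(X_test))` would show how the remaining 18% of errors split between bad reviews predicted as good and the reverse.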

Predicting Sentiment

Let’s make some predictions:

```python
print(test_reviews.iloc[0])
print("Bad" if y_test[0][0] == 1 else "Good")
```

```
Asked for late checkout and didnt get an answer then got a yes but had to pay 25 euros by noon they called to say sorry you have to leave in 1h knowing that i had a sick dog and an appointment next to the hotel Location staff

Bad
```

The prediction:

```python
y_pred = model.predict(X_test[:1])
print(y_pred)
"Bad" if np.argmax(y_pred) == 0 else "Good"
```

```
[[0.9274073 0.07259267]]
'Bad'
```

This one is correct. Let’s have a look at another one:

```python
print(test_reviews.iloc[1])
print("Bad" if y_test[1][0] == 1 else "Good")
```

```
Don t really like modern hotels Had no character Bed was too hard Good location rooftop pool new hotel nice balcony nice breakfast

Good
```

```python
y_pred = model.predict(X_test[1:2])
print(y_pred)
"Bad" if np.argmax(y_pred) == 0 else "Good"
```

```
[[0.39992586 0.6000741 ]]
'Good'
```

The model gets this mixed review right, too, although it is much less confident (about 60%).

Conclusion

Well done! You can now build a Sentiment Analysis model with Keras. You can reuse this approach for other text classification tasks, too!

You learned how to:

- Convert text to embedding vectors using the Universal Sentence Encoder model

- Build a hotel review Sentiment Analysis model

- Use the model to predict sentiment on unseen data


Can you use the Universal Sentence Encoder model for other tasks? Comment down below.
