Training a Deep Neural Network with Backpropagation from Scratch in JavaScript

— Deep Learning, Machine Learning, Neural Network, JavaScript — 7 min read


TL;DR Learn how to implement Gradient Descent for cases with multiple features in your dataset. Understand how Backpropagation works and use it together with Gradient Descent to train a Deep Neural Network.

You’re still trying to build a model that predicts the number of infected patients (with a novel respiratory virus) for tomorrow based on historical data. But now, you have more data. You’re ready to use a more powerful model - a Deep Neural Network.

In this part, you’ll learn how to:

- Implement Generalized Gradient Descent and train a small Neural Network with it

- Understand how Backpropagation works and implement it from scratch using TensorFlow.js

- Train a Deep Neural Network using Backpropagation to predict the number of infected patients

If you’re thinking about skipping this part - DON’T! You should really understand how Backpropagation works!

In the previous part, you implemented Gradient Descent for a single input. Can we do the same with multiple features?

A feature is a characteristic of each example in your dataset. For example - if you want to predict somebody’s age, you might have features like height, weight and gender.

Generalized Gradient Descent

Let’s start with a review of the data that you’ll use to train our Neural Network:

```javascript
const DATA = [
  // infections, infected countries
  [2.0, 1.0],
  [5.0, 1.0],
  [7.0, 4.0],
  [12.0, 5.0],
]

const nextDayInfections = [5.0, 7.0, 12.0, 19.0]
```

We have two features this time around - the number of daily infections and the number of countries with infected patients.

Let’s create the initial weight values. We’ll use 2 - one for each feature:

```javascript
var weights = [1.0, 0.5]
```

How did I come up with the values? I just spit out some numbers. Is this the best you can do? No! In fact, choosing good initial weight values is really important. We’ll look at a simple and good strategy to do it later in this part.

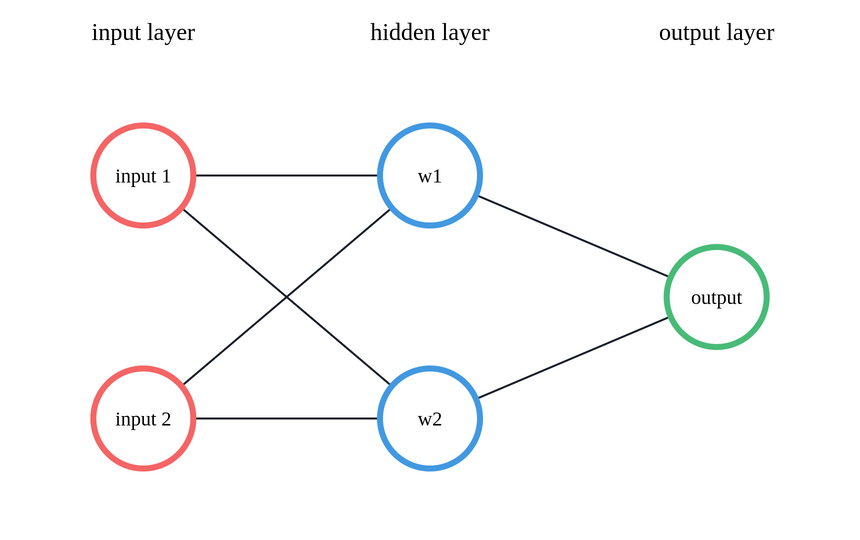

Your simple Neural Network looks like this:

You already know how to make predictions when you have multiple inputs - take the weighted sum of a data point and the weights:

```javascript
// Weighted sum of a data point's features and the weights
const weightedSum = (data, weights) => {
  var prediction = 0
  for (const [i, weight] of weights.entries()) {
    prediction += data[i] * weight
  }
  return prediction
}

const neuralNet = (data, weights) => weightedSum(data, weights)
```

We also need to adjust our method for updating the weights (since we have more than one). You need to apply the update rule for each feature and its associated weight:

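```javascript
// One Gradient Descent step for a single data point: each weight moves by
// ALPHA * (prediction - true value) * (the feature it multiplies)
const updateWeights = (dataPoint, prediction, trueInfectedCount) => {
  for (const [i, d] of dataPoint.entries()) {
    const update = (prediction - trueInfectedCount) * d
    weights[i] -= ALPHA * update
  }
}
```

Alright, you now have all the components to implement the generalized version of Gradient Descent: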

```javascript
// Learning rate
const ALPHA = 0.0002

const DATA = [
  // infections, infected countries
  [2.0, 1.0],
  [5.0, 1.0],
  [7.0, 4.0],
  [12.0, 5.0],
]

const nextDayInfections = [5.0, 7.0, 12.0, 19.0]

var weights = [1.0, 0.5]

const weightedSum = (data, weights) => {
  var prediction = 0

  for (const [i, weight] of weights.entries()) {
    prediction += data[i] * weight
  }

  return prediction
}

const updateWeights = (dataPoint, prediction, trueInfectedCount) => {
  for (const [i, d] of dataPoint.entries()) {
    const update = (prediction - trueInfectedCount) * d
    weights[i] -= ALPHA * update
  }
}

const neuralNet = (data, weights) => weightedSum(data, weights)
const error = (prediction, trueValue) => (prediction - trueValue) ** 2

for (const i of Array(100).keys()) {
  var errors = 0

  console.log(`epoch ${i + 1}`)

  // Predict, measure the error and update the weights after every data point
  for (const [j, dataPoint] of DATA.entries()) {
    const prediction = neuralNet(dataPoint, weights)
    const trueInfectedCount = nextDayInfections[j]

    errors += error(prediction, trueInfectedCount)

    updateWeights(dataPoint, prediction, trueInfectedCount)

    console.log(`prediction: ${prediction}`)
  }

  const epochError = errors / DATA.length
  console.log(`error: ${epochError}\n`)
}
```

```
epoch 1
prediction: 2.5
prediction: 5.505499999999999
prediction: 9.020657099999998
prediction: 14.59589883232
error: 9.189030365235194

epoch 2
prediction: 2.5420573212125435
prediction: 5.599171063693172
prediction: 9.17076892133457
prediction: 14.836072301932777
error: 8.336661573054283

epoch 3
prediction: 2.5819331000831913
prediction: 5.687974183830248
prediction: 9.313097463682844
prediction: 15.063781754512572
error: 7.570429647636219

...

epoch 98
prediction: 3.307142155409998
prediction: 7.289664016823003
prediction: 11.906137672497662
prediction: 19.196247772845233
error: 0.7492490623757035

epoch 99
prediction: 3.307397419714084
prediction: 7.2900623947196825
prediction: 11.907106811690227
prediction: 19.19759306027421
error: 0.7491779623809854

epoch 100
prediction: 3.3076402607543107
prediction: 7.29043311877873
prediction: 11.90803160421936
prediction: 19.198867413412678
error: 0.7491098293607978
```

The error is gradually (pun intended) reducing - great! We iterate over each data point, make a prediction, calculate the error and adjust the weights.
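Where does the update rule come from? For one example, the squared error is E = (w·x − y)², and its derivative with respect to weight i is 2(w·x − y)·x_i. That is exactly the update in `updateWeights`, up to the factor 2, which gets absorbed into `ALPHA`. A minimal plain-JavaScript sketch (no TensorFlow.js; `predict`, `updateTerm` and `numericGradient` are hypothetical helpers written for illustration) compares the update term with a finite-difference estimate of the gradient for the first data point:

```javascript
// Hypothetical helpers, written for illustration (plain JavaScript, no TensorFlow.js)
const predict = (x, w) => x.reduce((sum, xi, i) => sum + xi * w[i], 0)

// Squared error for one example: E(w) = (w·x − y)²
const squaredError = (x, y, w) => (predict(x, w) - y) ** 2

// The per-weight update used in updateWeights: (prediction − y) · x_i
const updateTerm = (x, y, w) => x.map(xi => (predict(x, w) - y) * xi)

// Central finite-difference estimate of ∂E/∂w_i
const numericGradient = (x, y, w, eps = 1e-6) =>
  w.map((_, i) => {
    const plus = w.slice()
    plus[i] += eps
    const minus = w.slice()
    minus[i] -= eps
    return (squaredError(x, y, plus) - squaredError(x, y, minus)) / (2 * eps)
  })

const x = [2.0, 1.0] // first data point: infections, infected countries
const y = 5.0 // next-day infections
const w = [1.0, 0.5] // the initial weights

console.log(updateTerm(x, y, w)) // [ -5, -2.5 ]
// Twice the update term, up to floating-point error: the 2 is absorbed into ALPHA
console.log(numericGradient(x, y, w))
```

Since the error is a quadratic function of the weights, the central difference matches the analytic gradient almost exactly here; with more complex models the two only agree approximately.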

We did get the job done, but that code took an awful lot of lines to write. Let’s look at the same implementation using TensorFlow.js:

```javascript
import * as tf from "@tensorflow/tfjs"

const ALPHA = 0.0002

const DATA = tf.tensor([
  // infections, infected countries
  [2.0, 1.0],
  [5.0, 1.0],
  [7.0, 4.0],
  [12.0, 5.0],
])

const nextDayInfections = tf.tensor([5.0, 7.0, 12.0, 19.0])

var weights = tf.tensor([1.0, 0.5])

const neuralNet = (data, weights) => data.dot(weights)
const error = (prediction, trueValue) => tf.square(prediction.sub(trueValue))

for (const i of Array(100).keys()) {
  // Predictions for all 4 examples at once
  const prediction = neuralNet(DATA, weights)

  const epochError = tf.mean(error(prediction, nextDayInfections))

  // Prediction minus true value, one entry per example
  const loss = prediction.sub(nextDayInfections)

  // Sum of the per-example updates for each weight, scaled by the learning rate
  const change = loss.dot(DATA).mul(ALPHA)

  weights = weights.sub(change)

  console.log(`epoch ${i + 1}`)

  for (const n of prediction.dataSync()) {
    console.log(`prediction: ${n.toFixed(2)}`)
  }

  console.log(`error: ${epochError.dataSync()}\n`)
}
```

```
epoch 1
prediction: 2.50
prediction: 5.50
prediction: 9.00
prediction: 14.50
error: 9.4375

epoch 2
prediction: 2.54
prediction: 5.60
prediction: 9.15
prediction: 14.75
error: 8.547682762145996

epoch 3
prediction: 2.58
prediction: 5.69
prediction: 9.30
prediction: 14.98
error: 7.74901819229126

...

epoch 98
prediction: 3.31
prediction: 7.29
prediction: 11.91
prediction: 19.20
error: 0.7479317784309387

epoch 99
prediction: 3.31
prediction: 7.29
prediction: 11.91
prediction: 19.20
error: 0.7478666305541992

epoch 100
prediction: 3.31
prediction: 7.29
prediction: 11.91
prediction: 19.20
error: 0.7478042244911194
```

Much more succinct! Calculating the weighted sum and updating the weights takes just a couple of Tensor methods. The results are pretty much the same, though not identical: the loop version updates the weights after every example, while this version makes a single update per epoch using all four examples at once.

You might’ve noticed something else here. Why don’t we iterate over the examples of our dataset? TensorFlow.js uses a technique called vectorization:

“Vectorization” (simplified) is the process of rewriting a loop so that instead of processing a single element of an array N times, it processes (say) 4 elements of the array simultaneously N/4 times. Stephen Canon

So, TensorFlow.js makes things faster and easier to read! There are a lot of frameworks that can do that for you, but in JavaScript land TensorFlow.js is king!
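In the TensorFlow.js version, `loss.dot(DATA)` replaces the loop over examples: it multiplies each example's error by that example's features and sums the results for every weight in one operation. A minimal plain-JavaScript sketch (no TensorFlow.js; `dot`, `loopUpdate` and `vectorUpdate` are hypothetical helpers) checks that, with the weights held fixed, this gives the same numbers as adding up the per-example update terms one by one:

```javascript
const DATA = [
  // infections, infected countries
  [2.0, 1.0],
  [5.0, 1.0],
  [7.0, 4.0],
  [12.0, 5.0],
]
const nextDayInfections = [5.0, 7.0, 12.0, 19.0]
const weights = [1.0, 0.5]

const dot = (a, b) => a.reduce((sum, ai, i) => sum + ai * b[i], 0)

// Loop version: add up (prediction − y) · x_i over all examples,
// using the same weights for every example
const loopUpdate = (X, y, w) => {
  const total = w.map(() => 0)
  for (const [j, x] of X.entries()) {
    const residual = dot(x, w) - y[j]
    for (const i of w.keys()) total[i] += residual * x[i]
  }
  return total
}

// Vectorized version: residuals = X·w − y, then update_i = residuals · (column i of X),
// the plain-JavaScript equivalent of loss.dot(DATA)
const vectorUpdate = (X, y, w) => {
  const residuals = X.map((x, j) => dot(x, w) - y[j])
  return w.map((_, i) => dot(residuals, X.map(x => x[i])))
}

console.log(loopUpdate(DATA, nextDayInfections, weights)) // [ -87.5, -38.5 ]
console.log(vectorUpdate(DATA, nextDayInfections, weights)) // [ -87.5, -38.5 ]
```

Multiplying these numbers by `ALPHA` and subtracting them from the weights is one step of batch Gradient Descent; TensorFlow.js runs the same arithmetic as tensor operations instead of JavaScript loops.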

Backpropagation

Backpropagation is an algorithm for training Neural Networks. Given the current error, Backpropagation figures out how much each weight contributes to this error and the amount that needs to be changed (using gradients). It works with arbitrarily complex Neural Nets!

Backpropagation doesn’t update (optimize) the weights! For that, you need optimization algorithms such as Gradient Descent.

Alright, but we did pretty well without Backpropagation so far? Why use it? Well, you’ve been using Backpropagation all along. Your Neural Network was just… tiny!

Training a Deep Neural Network with Backpropagation

In recent years, Deep Neural Networks have beaten pretty much every other model on various Machine Learning tasks. They are like the crazy hottie you’re so attracted to - they can give you immense pleasure but can also make your life miserable if left unchecked.

They are extremely flexible models, but so much choice comes with a price. Sometimes, you don’t know which option is best. A variety of tools, libraries, architectures, optimizers and bizarre ideas have exploded recently. How can you choose?

I’ll use the Lindy effect and show you concepts that stood the test of time. Later on, you’ll build a complete Deep Neural Network and train it with Backpropagation!

The Lindy effect is a theory that the future life expectancy of some non-perishable things like a technology or an idea is proportional to their current age, so that every additional period of survival implies a longer remaining life expectancy. Lindy effect - Wikipedia

Deep Neural Networks

The intuition behind Artificial Neural Network (ANN) models can be explained by squinting your eyes and looking at how our brains work and their structure. Our brains contain neurons and connections between them (synapses).

In ANNs, weights are arranged in horizontal structures known as layers. Layers are stacked over each other. But why?

Neural Networks contain an input layer (where the data gets fed), hidden layer(s) (parameters (weights) that are learned during training), and an output layer (the predicted value(s)). Neural Networks with 2 or more (hidden) layers are called Deep Neural Networks.

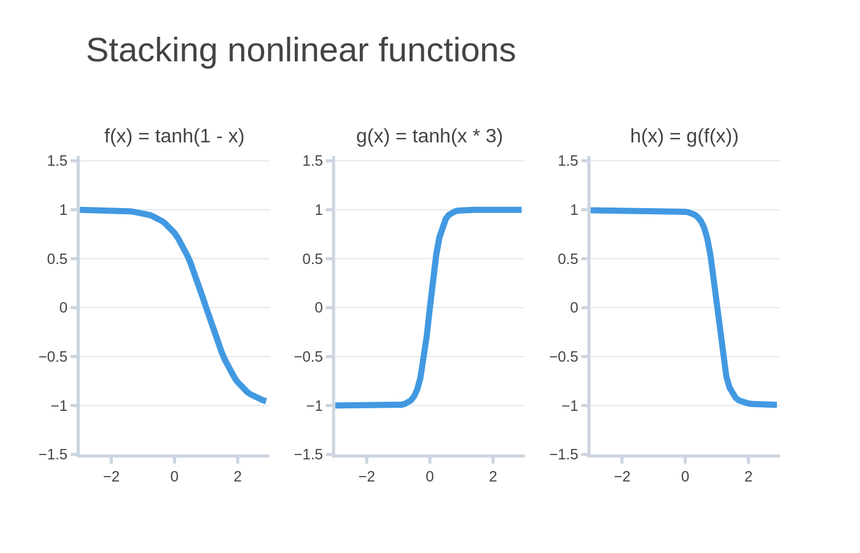

Neural Networks are very good nonlinear learners - they can approximate functions that are not representable with a line. This is possible by stacking layers. In the real world - not very many problems can be solved by drawing a line (e.g. predicting car prices by a linear relationship will not give you a good result - some cars are much more expensive than your linear model expects). How can they do that?

Activation functions

Introducing nonlinearity in your Neural Network is achieved by adding activation functions to each layer’s output.

Building a Neural Network with multiple layers without adding activation functions between them is equivalent to building a Neural Network with a single layer.
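With weight matrices, the collapse follows from matrix multiplication being associative: (X·W1)·W2 = X·(W1·W2), so two linear layers act like one layer whose weights are W1·W2. A minimal plain-JavaScript sketch with made-up weights (`matMul` is a hypothetical helper, not a TensorFlow.js function):

```javascript
// Hypothetical helper: multiply an [n, k] matrix by a [k, m] matrix (arrays of rows)
const matMul = (A, B) =>
  A.map(row => B[0].map((_, j) => row.reduce((sum, a, k) => sum + a * B[k][j], 0)))

const X = [
  [2.0, 1.0],
  [5.0, 1.0],
] // two data points with 2 features each
const W1 = [
  [0.5, -1.0, 2.0],
  [1.5, 0.25, -0.5],
] // made-up "layer 1" weights: 2 inputs -> 3 neurons
const W2 = [[1.0], [2.0], [-0.5]] // made-up "layer 2" weights: 3 neurons -> 1 output

// Two stacked layers without an activation function...
const twoLayers = matMul(matMul(X, W1), W2)
// ...give the same output as a single layer with weights W1·W2
const oneLayer = matMul(X, matMul(W1, W2))

console.log(twoLayers) // [ [ -2.75 ], [ -10.25 ] ]
console.log(oneLayer) // [ [ -2.75 ], [ -10.25 ] ]
```

Putting a nonlinear function between the two `matMul` calls is what breaks this equivalence.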

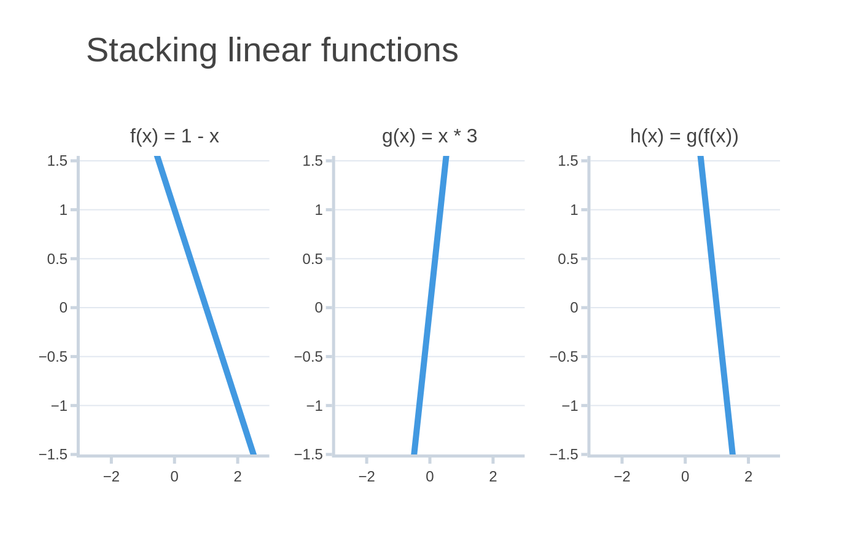

Let’s try to stack (call one with the result from another) two linear functions:

$$f(x) = 1 - x$$

and

$$g(x) = x \times 3$$

Combining those two gives you a new function:

$$h(x) = g(f(x)) = (1 - x) \times 3 = 3 - 3x$$

The result is still a linear function. Have a look:

And here’s a preview of what stacking nonlinear functions looks like:

Alright, those activation functions sound really magical. They must be hard to understand and implement, right? Not at all!

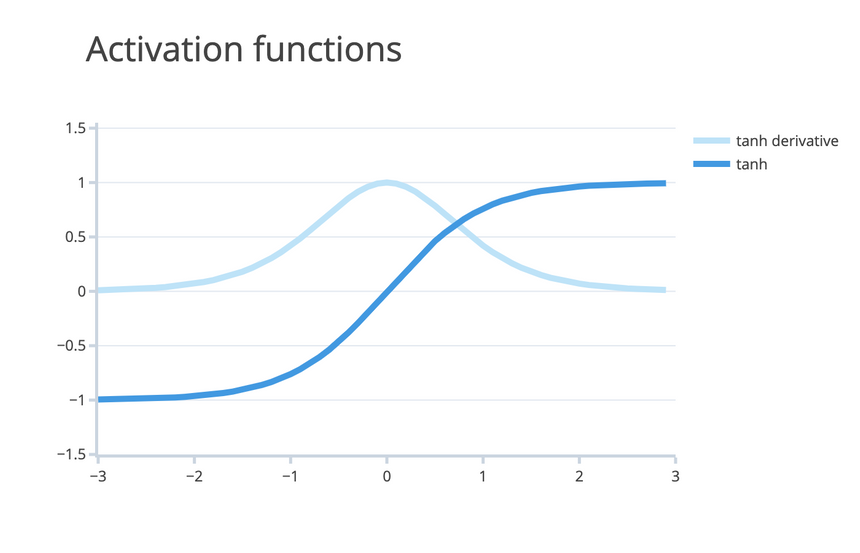

Let’s look at one that you might already be familiar with - the hyperbolic tangent:

$$\tanh(x) = \frac{\sinh(x)}{\cosh(x)}$$

And here is the TensorFlow.js version:

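```javascript
// TensorFlow.js also ships a built-in tf.tanh(); this spells out the definition
const tanh = x => tf.sinh(x).div(tf.cosh(x))
```

We’re also going to use the first derivative of tanh. Here is the definition (taken from a table of derivatives):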

$$\tanh'(x) = 1 - \tanh^2(x)$$

The implementation creates a tensor of ones (with the correct shape) and subtracts the squared tanh from it:

```javascript
// First derivative of tanh at x, where x is the input to tanh (not its output)
const tanhPrime = x => tf.ones(x.shape).sub(tf.square(tanh(x)))
```

If you are anything like me, this will not give you a clear picture of what is happening. So here is one that should do it:

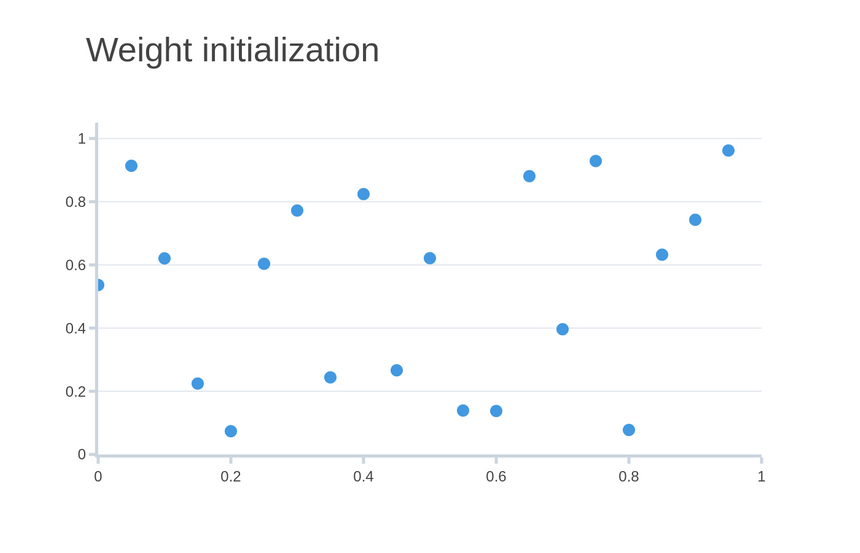
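To see the same thing in numbers, here is a plain-JavaScript sketch that mirrors `tanh` and `tanhPrime` with `Math.sinh` and `Math.cosh` (an assumption made so it runs without TensorFlow.js). It checks the derivative against a finite-difference estimate and shows that for larger inputs tanh flattens out near 1, so its derivative approaches 0:

```javascript
// tanh written the same way as the TensorFlow.js version: sinh(x) / cosh(x).
// For very large |x| (around 710+) sinh and cosh overflow to Infinity, so the
// built-in Math.tanh is the safer choice in real code.
const tanh = x => Math.sinh(x) / Math.cosh(x)

// First derivative of tanh, evaluated at the *input* x: 1 − tanh²(x)
const tanhPrime = x => 1 - tanh(x) ** 2

// Central finite-difference estimate of a derivative, for comparison
const numericDerivative = (f, x, eps = 1e-6) => (f(x + eps) - f(x - eps)) / (2 * eps)

for (const x of [0, 0.5, 2, 5]) {
  console.log(
    `x = ${x}: tanh = ${tanh(x).toFixed(4)}, ` +
      `tanhPrime = ${tanhPrime(x).toFixed(4)}, ` +
      `numeric = ${numericDerivative(tanh, x).toFixed(4)}`
  )
}
```

Note that `tanhPrime` expects the value *before* the activation is applied; passing it a value that already went through tanh would apply tanh twice and give a different number.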

Weight initialization

Thus far, the weights we’ve been using have been somewhat of magic numbers. What initial values are good for our weights?

For quite a while, Neural Networks and other models “weren’t working” during the 80s and 90s. Almost all hopes were lost. Those periods are known as AI Winters. What were the reasons?

For one, the hardware wasn’t quite there - you simply couldn’t process and store large amounts of high-quality images and texts. Also, the algorithms weren’t quite effective! Weight initialization was also a problem - what values should we use?

It turns out (found with mostly empirical testing) that weights must be initialized with small random numbers. This is mostly due to the quirks of using Gradient Descent - starting from random locations in high-dimensional spaces lets you explore more possibilities for good weights (low errors) as the algorithm does its magic.

In practice, we can use values from a uniform distribution in TensorFlow.js like so:

```javascript
// 100 weights drawn uniformly from the range [0, 1)
const weights = tf.randomUniform([100], 0, 1)
```

This generates 100 values in the range between 0 and 1. Here is how they might look:

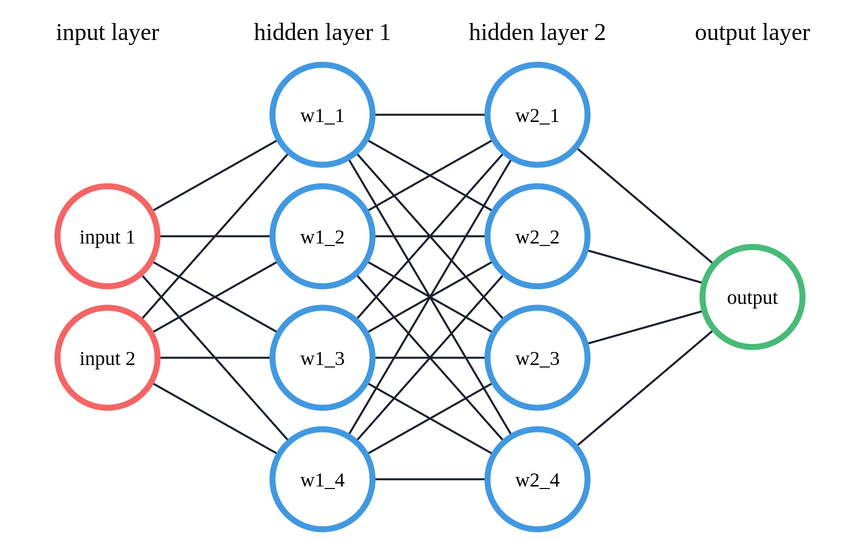
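One well-established reason the starting values must be random (and not, say, all equal) is symmetry: if every weight starts at the same value, every hidden neuron computes the same output and receives the same gradient, so the neurons stay identical no matter how long you train. A minimal plain-JavaScript sketch with a hypothetical 2 → 2 → 1 network (tanh hidden layer, linear output, one training example) makes that visible:

```javascript
// A tiny 2 -> 2 -> 1 network (tanh hidden layer, linear output) on one example
const x = [2.0, 1.0] // one data point
const y = 5.0 // its next-day infections

// Gradients of 0.5 * (prediction − y)² with respect to each hidden neuron's input weights.
// W1[i][h] connects input i to hidden neuron h; w2[h] connects neuron h to the output.
const hiddenGradients = (W1, w2) => {
  const z = [0, 1].map(h => x[0] * W1[0][h] + x[1] * W1[1][h])
  const a = z.map(Math.tanh)
  const prediction = a[0] * w2[0] + a[1] * w2[1]
  const outputDelta = prediction - y
  // One gradient vector (one entry per input) for each hidden neuron
  return [0, 1].map(h => {
    const hiddenDelta = outputDelta * w2[h] * (1 - Math.tanh(z[h]) ** 2)
    return x.map(xi => xi * hiddenDelta)
  })
}

// Every weight starts at the same value: both hidden neurons get identical gradients
const same = hiddenGradients(
  [
    [0.5, 0.5],
    [0.5, 0.5],
  ],
  [0.5, 0.5]
)

// Random starting values (uniform in [0, 1), like tf.randomUniform above) break the symmetry
const random = hiddenGradients(
  [
    [Math.random(), Math.random()],
    [Math.random(), Math.random()],
  ],
  [Math.random(), Math.random()]
)

console.log(same)
console.log(random)
```

With identical gradients, identical weights get identical updates, so in the first case the two hidden neurons can never learn different things.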

Architecture

We’ll train a Deep Neural Network with 2 layers. Let’s start by converting the data to Tensors:

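```javascript
const DATA = tf.tensor([
  // infections, infected countries
  [2.0, 1.0],
  [5.0, 1.0],
  [7.0, 4.0],
  [12.0, 5.0],
])

// Shape [4] -> [4, 1]: a single column, one row per example
const nextDayInfections = tf.expandDims(tf.tensor([5.0, 7.0, 12.0, 19.0]), 1)
```

Note that we convert the next day infections to a 2D tensor of shape [4, 1] (using expandDims()), so it has the same shape as the network’s prediction. Without it, subtracting a [4] tensor from a [4, 1] tensor would broadcast into a [4, 4] tensor instead of giving one error per example.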

We need two sets of weights (one for each hidden layer):

```javascript
const HIDDEN_SIZE = 4

// inputs -> hidden neurons (2 x 4) and hidden neurons -> output (4 x 1)
var weights1 = tf.randomUniform([2, HIDDEN_SIZE], 0, 1)
var weights2 = tf.randomUniform([HIDDEN_SIZE, 1], 0, 1)
```

Here’s what your Deep Neural Net looks like:

Our input layer has a size of 2 - infections and infected countries. The first set of weights (weights1) connects it to 4 hidden neurons (HIDDEN_SIZE). The second set of weights (weights2) connects those 4 neurons to the output layer with a size of 1 (the number of predicted infections for tomorrow).
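Tracking the shapes through one forward pass over all 4 examples can help. Writing $X$ for DATA, $W_1$ and $W_2$ for weights1 and weights2, $Z_1$ for the weighted sum before tanh, $A_1$ for layer1 and $\hat{Y}$ for layer2 (symbols introduced here for illustration, not names from the code):

$$
\underbrace{X}_{4 \times 2}\,\underbrace{W_1}_{2 \times 4} = \underbrace{Z_1}_{4 \times 4},
\qquad
A_1 = \tanh(Z_1),
\qquad
\underbrace{A_1}_{4 \times 4}\,\underbrace{W_2}_{4 \times 1} = \underbrace{\hat{Y}}_{4 \times 1}
$$

Each row of $\hat{Y}$ is the predicted number of infections for one example.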

Finally, we can run the Backpropagation algorithm:

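```javascript
// Forward pass
const layer1Input = DATA.dot(weights1) // weighted sum, before the activation
const layer1 = tanh(layer1Input)
const layer2 = layer1.dot(weights2)

// Backward pass: how much each layer contributed to the error
const layer2Delta = layer2.sub(nextDayInfections)
// tanhPrime expects the value *before* tanh was applied, so pass layer1Input (not layer1)
const layer1Delta = layer2Delta.dot(weights2.transpose()).mul(tanhPrime(layer1Input))

// Gradient Descent updates
weights2 = weights2.sub(layer1.transpose().dot(layer2Delta).mul(ALPHA))
weights1 = weights1.sub(DATA.transpose().dot(layer1Delta).mul(ALPHA))
```

We start by taking the weighted sum of the data and the weights of the first hidden layer. The result gets passed to our activation function tanh(). This is the prediction of the first hidden layer.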

The prediction of the next hidden layer is simply the dot product (weighted sum) of the previous layer with the weights for the second hidden layer.

Next, we need to calculate how much each weight should be changed. We can do that by identifying how much each weight contributed to the error.

For the second hidden layer, we’re using the difference between the prediction and real values. That’s it!

The change of the first hidden layer is computed by taking the previous delta and multiplying it by the (transposed) weights of the second hidden layer. Finally, we do element-wise multiplication with the first derivative of tanh to apply the rate of change given by the activation function.
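In matrix form, write $X$ for DATA, $W_1$ and $W_2$ for weights1 and weights2, $Z_1 = X W_1$ for the weighted sum before tanh, $A_1 = \tanh(Z_1)$ for layer1, $\hat{Y} = A_1 W_2$ for layer2 and $Y$ for nextDayInfections (symbols introduced here for illustration). Assuming the error being minimized is $E = \tfrac{1}{2}\sum (\hat{Y} - Y)^2$, the chain rule gives the two deltas, where $\odot$ is element-wise multiplication:

$$
\delta_2 = \frac{\partial E}{\partial \hat{Y}} = \hat{Y} - Y,
\qquad
\delta_1 = \frac{\partial E}{\partial Z_1} = \left(\delta_2 W_2^{\top}\right) \odot \tanh'(Z_1) = \left(\delta_2 W_2^{\top}\right) \odot \left(1 - A_1 \odot A_1\right)
$$

Here $\delta_2$ corresponds to layer2Delta and $\delta_1$ to layer1Delta. The derivative of tanh is taken at $Z_1$, the value before the activation, which written in terms of layer1 is $1 - A_1 \odot A_1$.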

The weight update rules are pretty much identical, except that we apply transpose() to convert the tensors into correct shapes so that operations can be applied correctly.

The weights of the second hidden layer get updated based on the weighted sum of the change (delta) and the prediction from the first hidden layer. The weights of the first hidden layer get updated using their delta and the original dataset.
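A standard way to convince yourself that the deltas and update rules are right is a gradient check: compare the backpropagated gradients with finite-difference estimates of the error. Here is a minimal plain-JavaScript sketch for the same 2 → 4 → 1 shape. It makes a few assumptions: no TensorFlow.js, fixed hypothetical weights instead of random ones (so the check is reproducible), and an error defined as half the sum of squared errors, whose gradient is exactly what the update rules subtract (before scaling by `ALPHA`):

```javascript
// Same data as above, as plain arrays
const DATA = [
  [2.0, 1.0],
  [5.0, 1.0],
  [7.0, 4.0],
  [12.0, 5.0],
]
const TARGETS = [5.0, 7.0, 12.0, 19.0]

// Hypothetical fixed weights so the check is reproducible.
// weights1[i][h] connects input i to hidden neuron h; weights2[h] connects h to the output.
const weights1 = [
  [0.1, -0.2, 0.05, 0.1],
  [-0.1, 0.2, 0.15, 0.05],
]
const weights2 = [0.4, -0.3, 0.2, 0.1]

// Forward pass: pre-activations, tanh outputs and predictions for all examples
const forward = (w1, w2) => {
  const layer1Input = DATA.map(x => w1[0].map((_, h) => x[0] * w1[0][h] + x[1] * w1[1][h]))
  const layer1 = layer1Input.map(row => row.map(Math.tanh))
  const layer2 = layer1.map(row => row.reduce((s, a, h) => s + a * w2[h], 0))
  return { layer1Input, layer1, layer2 }
}

// Assumed error: half the sum of squared errors, so its gradient has no factor 2
const loss = (w1, w2) =>
  forward(w1, w2).layer2.reduce((s, p, j) => s + 0.5 * (p - TARGETS[j]) ** 2, 0)

// Backpropagation, mirroring layer2Delta / layer1Delta from the TensorFlow.js code
const backprop = (w1, w2) => {
  const { layer1Input, layer1, layer2 } = forward(w1, w2)
  const layer2Delta = layer2.map((p, j) => p - TARGETS[j])
  // (layer2Delta · weights2ᵀ) ⊙ tanh'(pre-activation), with tanh'(z) = 1 − tanh²(z)
  const layer1Delta = layer1Input.map((row, j) =>
    row.map((z, h) => layer2Delta[j] * w2[h] * (1 - Math.tanh(z) ** 2))
  )
  // grad2 = layer1ᵀ · layer2Delta
  const grad2 = w2.map((_, h) => layer1.reduce((s, a, j) => s + a[h] * layer2Delta[j], 0))
  // grad1 = DATAᵀ · layer1Delta
  const grad1 = w1.map((row, i) =>
    row.map((_, h) => DATA.reduce((s, x, j) => s + x[i] * layer1Delta[j][h], 0))
  )
  return { grad1, grad2 }
}

// Central finite-difference estimate of ∂loss/∂weights1[i][h]
const numericGrad1 = (i, h, eps = 1e-6) => {
  const plus = weights1.map(row => row.slice())
  const minus = weights1.map(row => row.slice())
  plus[i][h] += eps
  minus[i][h] -= eps
  return (loss(plus, weights2) - loss(minus, weights2)) / (2 * eps)
}

const { grad1, grad2 } = backprop(weights1, weights2)
for (const [i, row] of grad1.entries()) {
  for (const [h, g] of row.entries()) {
    console.log(`weights1[${i}][${h}]: backprop ${g.toFixed(6)}, numeric ${numericGrad1(i, h).toFixed(6)}`)
  }
}
```

If the derivative of tanh is evaluated at `layer1` (the values after tanh) instead of at the pre-activation, the backpropagated and numeric gradients for `weights1` no longer agree.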

Here is the complete example, running for 20 epochs:

```javascript
import * as tf from "@tensorflow/tfjs"

const ALPHA = 0.01

const HIDDEN_SIZE = 4

const DATA = tf.tensor([
  // infections, infected countries
  [2.0, 1.0],
  [5.0, 1.0],
  [7.0, 4.0],
  [12.0, 5.0],
])

const nextDayInfections = tf.expandDims(tf.tensor([5.0, 7.0, 12.0, 19.0]), 1)

var weights1 = tf.randomUniform([2, HIDDEN_SIZE], 0, 1)
var weights2 = tf.randomUniform([HIDDEN_SIZE, 1], 0, 1)

const tanh = x => tf.sinh(x).div(tf.cosh(x))

// Derivative of tanh at x, where x is the input to tanh
const tanhPrime = x => tf.ones(x.shape).sub(tf.square(tanh(x)))

for (const i of Array(20).keys()) {
  // Forward pass
  const layer1Input = DATA.dot(weights1)
  const layer1 = tanh(layer1Input)
  const layer2 = layer1.dot(weights2)

  // Backward pass (the tanh derivative is taken at the pre-activation)
  const layer2Delta = layer2.sub(nextDayInfections)
  const layer1Delta = layer2Delta
    .dot(weights2.transpose())
    .mul(tanhPrime(layer1Input))

  weights2 = weights2.sub(layer1.transpose().dot(layer2Delta).mul(ALPHA))

  weights1 = weights1.sub(DATA.transpose().dot(layer1Delta).mul(ALPHA))

  const currentError = tf.mean(tf.square(layer2.sub(nextDayInfections)))
  console.log(`epoch ${i + 1} error: ${currentError.dataSync()}`)
}
```

Sample output (your numbers will differ, since the weights are initialized randomly):

```
epoch 1 error: 125.68846130371094
epoch 2 error: 97.80104064941406
epoch 3 error: 77.51105499267578
epoch 4 error: 63.27639389038086
...
epoch 17 error: 29.5538330078125
epoch 18 error: 29.44598388671875
epoch 19 error: 29.36988639831543
epoch 20 error: 29.316192626953125
```

Interestingly, the error is surely decreasing, but it doesn’t get nearly as low as before. Can you find out why?

Summary

Well, you surely learned a lot about how to train Neural Networks. The Backpropagation algorithm has been around for a long time and seems to be working quite well in practice. This part helps you understand how exactly it does its thing.

In this part, you learned how to:

- Implement Generalized Gradient Descent and train a small Neural Network with it

- Understand how Backpropagation works and implement it from scratch using TensorFlow.js

- Train a Deep Neural Network using Backpropagation to predict the number of infected patients

So, now you’re ready to create infinitely complex Deep Neural Nets that solve any problem, right? Of course not!

If you’re anything like me, you want to try out different alphas, activation functions, and architectures. And you might cringe when you think that you need to deal with derivatives of funky functions. Fortunately, TensorFlow.js has got you covered - it makes things really simple and hides most of the complexities associated with Deep Learning.

How easy could it be? I’ll show you next!
