Hacker's Guide to Hyperparameter Tuning


TL;DR Learn how to search for good Hyperparameter values using Keras Tuner in your Keras and scikit-learn models.

Hyperparameter tuning refers to the process of searching for the best combination of hyperparameter values in some predefined space. For us mere mortals, that means: should I use a learning rate of 0.001 or 0.0001?

In particular, tuning Deep Neural Networks is notoriously hard (that’s what she said?). Choosing the number of layers, neurons, type of activation function(s), optimizer, and learning rate are just some of the options. Unfortunately, you don’t know in advance which of these choices matter.

On top of that, those models can be slow to train. Running many experiments in parallel might be a good option. Still, you need a lot of computational resources to do that on practical datasets.

Here are some of the ways that Hyperparameter tuning can help you:

- Better accuracy on the test set

- Reduced number of parameters

- Reduced number of layers

- Faster inference speed

None of these benefits are guaranteed, but in practice, you often get some combination of them.

Run the complete code in your browser

What is a Hyperparameter?

Hyperparameters are not learned during training; they are set by you (or your algorithm) and govern the whole training process. You can think of Hyperparameters as configuration variables you set when running some software. Common examples of Hyperparameters are the learning rate, optimizer type, activation function, and dropout rate.

Adjusting/finding good values is really slow. You have to wait for the whole training process to complete, evaluate the results and adjust the value(s). Unfortunately, you might have to repeat the whole search process when your data or model changes.

Don’t be a hero! Use Hyperparameters from papers or peers when your datasets and models are similar. At the very least, you can use those as a starting point.

When to do Hyperparameter Tuning?

Changing anything inside your model or data affects the results from previous Hyperparameter searches. So, you want to defer the search as much as possible.

Three things need to be in place before starting the search:

- You have intimate knowledge of your data

- You have an end-to-end framework/skeleton for running experiments

- You have a systematic way to record and check the results of the searches (coming up next)

Hyperparameter tuning can give you another 5-15% accuracy on the test data. That’s well worth it if you have the computational resources to find a good set of parameters.

Common strategies

There are two common ways to search for hyperparameters:

Improving one model

This option suggests that you use a single model and try to improve it over time (days, weeks or even months). Each time, you tweak the parameters to get an improvement on your validation set.

This option is used when your dataset is very large and you lack the computational resources for the next one. (Grad student optimization also falls within this category.)

Training many models

You train many models in parallel using different settings for the hyperparameters. This option is computationally demanding and can make your code messy.

Luckily, we’ll use the Keras Tuner to make the process more manageable.

Finding Hyperparameters

We’re searching for multiple parameters. It might sound tempting to try out every possible combination. Grid search is a good option for that.

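However, you might not want to do that. Random search is usually a better alternative, because Neural Networks tend to be much more sensitive to changes in some parameters than in others. With the same number of trials, a grid tests only a few distinct values of each parameter, while random search tries a new value of every parameter in each trial.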
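To make the grid-versus-random argument concrete, here’s a small pure-Python sketch (not Keras Tuner code). The objective `toy_score` is made up: it depends only on the learning rate and ignores momentum, mimicking a network that is far more sensitive to one hyperparameter. Both strategies get the same budget of 9 trials.

```python
import itertools
import math
import random

BUDGET = 9  # both strategies get the same number of trials

def toy_score(lr, momentum):
    """Hypothetical objective: only the learning rate matters (best at lr = 10**-2.5)."""
    return -(math.log10(lr) + 2.5) ** 2

def grid_search():
    """3 learning rates x 3 momentum values = 9 configurations."""
    return list(itertools.product([1e-4, 1e-3, 1e-2], [0.0, 0.5, 0.9]))

def random_search(rng):
    """9 configurations: lr log-uniform in [1e-4, 1e-2], momentum uniform in [0, 0.9]."""
    return [(10 ** rng.uniform(-4, -2), rng.uniform(0.0, 0.9)) for _ in range(BUDGET)]

def summarize(name, configs):
    best = max(configs, key=lambda c: toy_score(*c))
    distinct_lrs = len({lr for lr, _ in configs})
    print(f"{name}: {distinct_lrs} distinct learning rates, best score {toy_score(*best):.3f}")
    return distinct_lrs, toy_score(*best)

summarize("grid", grid_search())
summarize("random", random_search(random.Random(0)))
```

The grid spends its 9 trials on only 3 learning rates (momentum values are wasted here), while random search tries 9. With so few trials, random search will usually, but not always, land closer to the best learning rate.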

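Another approach is to use Bayesian Optimization. This method builds a surrogate function that estimates how good your model is going to be with a certain choice of hyperparameters. It updates that estimate after every trial and uses it to decide which values to try next.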
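The idea fits in a few lines of NumPy. This is a simplified illustration under our own assumptions, not Keras Tuner’s implementation: a single hyperparameter scaled to [0, 1], a Gaussian-process surrogate with a fixed squared-exponential kernel (length scale 0.2), and an upper-confidence-bound rule (mean + kappa * std) for picking the next trial. `toy_val_accuracy` is a made-up stand-in for training a model.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between 1-D arrays a (n,) and b (m,) -> (n, m)."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_query, noise=1e-6):
    """Gaussian-process surrogate: posterior mean and std at x_query."""
    y_mean = y_obs.mean()  # center the targets; the prior mean is the data mean
    K = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = rbf_kernel(x_obs, x_query)  # (n_obs, n_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs - y_mean))
    mean = K_s.T @ alpha + y_mean
    v = np.linalg.solve(L, K_s)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)  # prior variance is 1
    return mean, np.sqrt(var)

def bayesian_optimize(objective, init_points=(0.0, 1.0), n_trials=10, kappa=2.0):
    """Maximize objective on [0, 1] using the surrogate and an upper-confidence-bound rule."""
    candidates = np.linspace(0.0, 1.0, 201)
    xs = [float(x) for x in init_points]
    ys = [float(objective(x)) for x in xs]
    while len(xs) < n_trials:
        mean, std = gp_posterior(np.array(xs), np.array(ys), candidates)
        x_next = float(candidates[np.argmax(mean + kappa * std)])  # most promising candidate
        xs.append(x_next)
        ys.append(float(objective(x_next)))
    best = int(np.argmax(ys))
    return xs[best], ys[best], list(zip(xs, ys))

def toy_val_accuracy(x):
    """Hypothetical 'validation accuracy' as a function of a scaled hyperparameter."""
    return 0.8 - (x - 0.3) ** 2

best_x, best_y, history = bayesian_optimize(toy_val_accuracy)
print(f"best x={best_x:.3f}, score={best_y:.4f} after {len(history)} trials")
```

The surrogate predicts both a mean and an uncertainty for every candidate, so the acquisition rule can trade off values that look good (high mean) against values it knows little about (high std).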

Random search and Bayesian Optimization are both implemented in Keras Tuner. How can we use them?

Remember to occasionally re-evaluate your hyperparameters. Over time, you might’ve improved your algorithm, your dataset might have changed, or your hardware/software might be different. Because of those changes, the best settings for the hyperparameters can get stale and need to be re-evaluated.

Data

We’ll use the Titanic survivor data from Kaggle:

The competition is simple: use machine learning to create a model that predicts which passengers survived the Titanic shipwreck.

Let’s load and take a look at the training data:

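```python
# Notebook (Colab/Jupyter) shell command that downloads the dataset
!gdown --id 1uWHjZ3y9XZKpcJ4fkSwjQJ-VDbZS-7xi --output titanic.csv

import pandas as pd

df = pd.read_csv('titanic.csv')
```

Exploration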

Let’s take a quick look at the data and try to understand what it contains:

```python
df.shape
```

```
(891, 12)
```

We have 12 columns and 891 rows. Let’s see what the columns are:

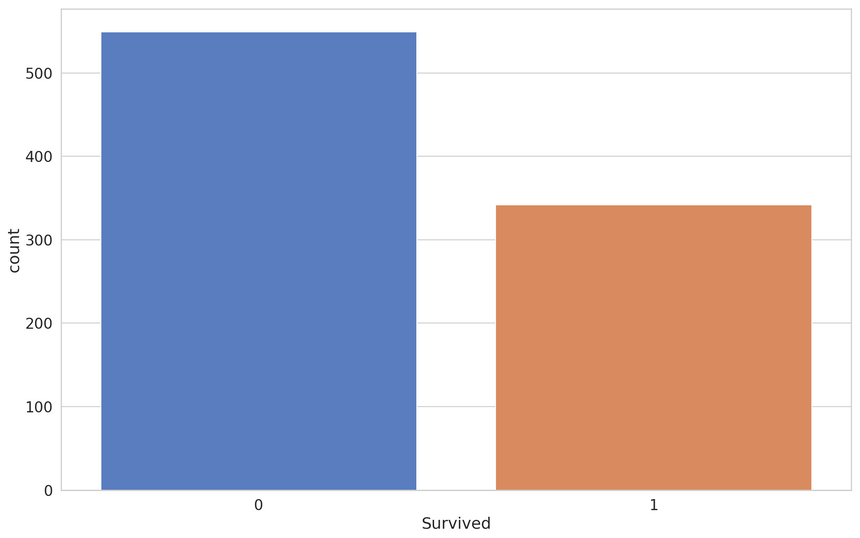

```python
df.columns
```

```
Index(['PassengerId', 'Survived', 'Pclass', 'Name', 'Sex', 'Age', 'SibSp',
       'Parch', 'Ticket', 'Fare', 'Cabin', 'Embarked'],
      dtype='object')
```

All of our models are going to predict the value of the Survived column. Let’s have a look at its distribution:

While the classes are not well balanced, we’ll use the dataset as-is. Read the Practical Guide to Handling Imbalanced Datasets to learn about some ways to solve this issue.

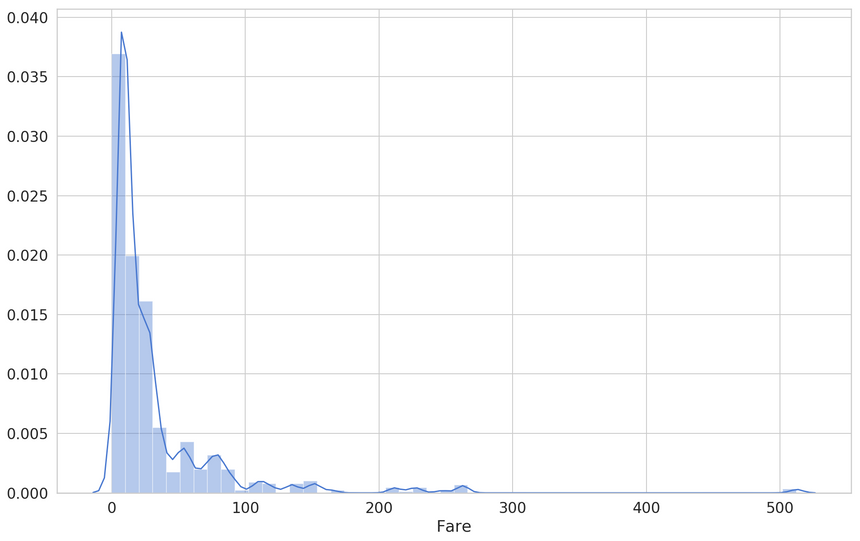

Another column that might interest you is the Fare (the price of the ticket):

About 80% of the tickets are priced below 30 USD. Do we have missing data?

Preprocessing

```python
missing = df.isnull().sum()
missing[missing > 0].sort_values(ascending=False)
```

```
Cabin       687
Age         177
Embarked      2
```

Yes, we have a lot of Cabin data missing. Luckily, we won’t need that feature for our model. Let’s drop it along with some other columns:

```python
df = df.drop(['Cabin', 'Name', 'Ticket', 'PassengerId'], axis=1)
```

We’re left with 8 columns (including Survived). We still have to handle the missing values in the Age and Embarked columns:

```python
df['Age'] = df['Age'].fillna(df['Age'].mean())
df['Embarked'] = df['Embarked'].fillna(df['Embarked'].mode()[0])
```

The missing Age values are replaced with the mean value. Missing Embarked values are replaced with the most common one.

Now that our dataset has no missing values, we need to preprocess (one-hot encode) the categorical features:

```python
df = pd.get_dummies(df, columns=['Sex', 'Embarked', 'Pclass'])
```

We can start building and optimizing our models. What do we need?
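The models below use X_train, y_train, X_test and y_test, but the split itself isn’t shown. Here’s a minimal sketch, assuming a random 80/20 split done with pandas and a seed of our own choosing; `split_features` is a hypothetical helper, not code from the original notebook.

```python
import pandas as pd

def split_features(df: pd.DataFrame, target: str = "Survived",
                   test_frac: float = 0.2, seed: int = 42):
    """Hypothetical helper: random train/test split into features and labels."""
    test_df = df.sample(frac=test_frac, random_state=seed)  # rows held out for testing
    train_df = df.drop(test_df.index)                       # everything else is training data
    # Cast to float32 so Keras also accepts the boolean one-hot columns newer pandas produces
    X_train = train_df.drop(columns=[target]).astype("float32")
    X_test = test_df.drop(columns=[target]).astype("float32")
    return X_train, X_test, train_df[target], test_df[target]

# On the preprocessed Titanic frame:
# X_train, X_test, y_train, y_test = split_features(df)
```

A stratified split (for example, scikit-learn’s `train_test_split` with its `stratify` argument) would keep the Survived ratio equal in both parts, which matters given the class imbalance noted earlier.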

Keras Tuner

Keras Tuner is a new library (still in beta) that promises:

Hyperparameter tuning for humans

Sounds cool. Let’s have a closer look.

There are two main requirements for searching Hyperparameters with Keras Tuner:

- Create a model building function that specifies possible Hyperparameter values

- Create and configure a Tuner to use for the search process
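The first requirement relies on a define-by-run idea: the model-building function receives an `hp` object, and a call such as `hp.Choice(...)` both declares a hyperparameter and returns a value for the current trial. The pure-Python class below is a hypothetical stand-in written only to illustrate that mechanism; it is not Keras Tuner’s actual `HyperParameters` implementation.

```python
import random

class RandomHP:
    """Hypothetical stand-in for the `hp` argument (illustration only)."""

    def __init__(self, rng):
        self.rng = rng
        self.space = {}   # hyperparameter name -> candidate values
        self.values = {}  # hyperparameter name -> value used in this trial

    def Choice(self, name, values):
        # Declaring the hyperparameter and sampling it happen in the same call
        self.space[name] = list(values)
        self.values[name] = self.rng.choice(self.space[name])
        return self.values[name]

    def Int(self, name, min_value, max_value, step=1):
        # Integer range with an inclusive upper bound, treated as a list of choices
        return self.Choice(name, range(min_value, max_value + 1, step))

def build_config(hp):
    # A model "description" instead of a real Keras model keeps the sketch dependency-free
    return {
        "units": hp.Int("units", 8, 64, step=8),
        "optimizer": hp.Choice("optimizer", ["adam", "sgd", "rmsprop"]),
    }

hp = RandomHP(random.Random(0))
config = build_config(hp)
print(hp.space)  # {'units': [8, 16, 24, 32, 40, 48, 56, 64], 'optimizer': ['adam', 'sgd', 'rmsprop']}
print(config)    # one sampled configuration
```

Because the search space is discovered by running the function, ordinary Python control flow (loops, conditionals) can shape it.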

The version of Keras Tuner we’re using in this article is commit 7f6b00f45c6e0b0debaf183fa5f9dcef824fb02f. Yes, we’re using the code from the master branch.

There are four different tuners available: RandomSearch, BayesianOptimization, Hyperband and Sklearn.

The scikit-learn Tuner is a bit special. It doesn’t implement any algorithm for searching Hyperparameters. Instead, it relies on the existing search strategies (Oracles) to tune scikit-learn models.

How can we use Keras Tuner to find good parameters?

Random Search

Let’s start with a complete example of how we can tune a model using Random Search:

```python
from tensorflow import keras

def tune_optimizer_model(hp):
    model = keras.Sequential()
    model.add(keras.layers.Dense(
        units=18,
        activation="relu",
        input_shape=[X_train.shape[1]]
    ))

    model.add(keras.layers.Dense(1, activation='sigmoid'))

    # Register a categorical hyperparameter; the tuner picks one value per trial
    optimizer = hp.Choice('optimizer', ['adam', 'sgd', 'rmsprop'])

    model.compile(
        optimizer=optimizer,
        loss='binary_crossentropy',
        metrics=['accuracy'])
    return model
```

Everything here should look familiar except for the way we’re choosing an Optimizer. We register a Hyperparameter named optimizer along with its possible values. The next step is to create a Tuner:

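```python
from kerastuner.tuners import RandomSearch

MAX_TRIALS = 20
EXECUTIONS_PER_TRIAL = 5

tuner = RandomSearch(
    tune_optimizer_model,
    objective='val_accuracy',
    max_trials=MAX_TRIALS,
    executions_per_trial=EXECUTIONS_PER_TRIAL,
    directory='test_dir',
    project_name='tune_optimizer',
    seed=RANDOM_SEED  # RANDOM_SEED must be defined beforehand
)
```

The Tuner needs a pointer to the model building function, the objective to optimize for (validation accuracy), and the maximum number of model configurations to test. executions_per_trial sets how many times each configuration is trained from scratch; the results are averaged, since a single run depends on the random initialization. The other settings are rather self-explanatory.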
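Conceptually, max_trials caps how many configurations get evaluated, and executions_per_trial averages several training runs of each one. The sketch below mimics that loop in pure Python. It is our own simplification, not Keras Tuner’s code: `noisy_val_accuracy` is a made-up objective, and the loop skips configurations it has already tried and stops when none are left.

```python
import random
import statistics

def noisy_val_accuracy(config, rng):
    """Hypothetical objective: a fixed 'true' accuracy per optimizer plus run-to-run noise."""
    base = {"adam": 0.75, "rmsprop": 0.74, "sgd": 0.70}[config["optimizer"]]
    return base + rng.gauss(0.0, 0.02)

def random_search(space, max_trials, executions_per_trial, seed=0, max_retries=100):
    rng = random.Random(seed)
    tried, results = set(), []
    for _ in range(max_trials):
        for _ in range(max_retries):
            config = {name: rng.choice(values) for name, values in space.items()}
            key = tuple(sorted(config.items()))
            if key not in tried:
                break
        else:
            break  # no unseen configuration found: the space is (probably) exhausted
        tried.add(key)
        # Average several independent "training runs" of the same configuration
        scores = [noisy_val_accuracy(config, rng) for _ in range(executions_per_trial)]
        results.append((statistics.mean(scores), config))
    return sorted(results, key=lambda r: r[0], reverse=True)  # best trial first

results = random_search({"optimizer": ["adam", "sgd", "rmsprop"]},
                        max_trials=20, executions_per_trial=5)
for score, config in results:
    print(f"{score:.4f} {config}")
```

With only three possible optimizers, a budget of 20 trials can’t be used up in this sketch, which would also explain why only three scores appear in the results summary below.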

We can get a summary of the different parameter values from our Tuner:

```python
tuner.search_space_summary()
```

```
Search space summary
|-Default search space size: 1
optimizer (Choice)
|-default: adam
|-ordered: False
|-values: ['adam', 'sgd', 'rmsprop']
```

Finally, we can start the search:

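```python
TRAIN_EPOCHS = 20

tuner.search(x=X_train,
             y=y_train,
             epochs=TRAIN_EPOCHS,
             validation_data=(X_test, y_test))
```

The search process saves the trials for later analysis and reuse. Keras Tuner makes it easy to obtain previous results and load the best model found so far. Note that we pass the test set as validation data to keep things simple; in a real project, you’d tune on a separate validation split so the final test score stays unbiased.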

You can get a summary of the results:

```python
tuner.results_summary()
```

```
Results summary
|-Results in test_dir/tune_optimizer
|-Showing 10 best trials
|-Objective: Objective(name='val_accuracy', direction='max') Score: 0.7519553303718567
|-Objective: Objective(name='val_accuracy', direction='max') Score: 0.7430167198181152
|-Objective: Objective(name='val_accuracy', direction='max') Score: 0.7273743152618408
```

That’s not helpful, since it doesn’t show the actual values of the Hyperparameters. Follow this issue for a resolution.

Luckily, we can obtain the Hyperparameter values like so:

```python
tuner.oracle.get_best_trials(num_trials=1)[0].hyperparameters.values
```

```
{'optimizer': 'adam'}
```

Even better, we can get the best performing model:

```python
best_model = tuner.get_best_models()[0]
```

OK, choosing an Optimizer looks easy enough. What else can we tune?

Learning rate and Momentum

The following examples use the same RandomSearch settings. We’ll change the model building function.
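Before tuning them, it helps to see what the learning rate and momentum control. The pure-Python sketch below applies the classic (non-Nesterov) momentum update documented for Keras’ SGD optimizer, velocity = momentum * velocity - learning_rate * g followed by w = w + velocity, to a toy loss f(w) = w**2. The loss, starting point, and step count are our own choices for illustration.

```python
def sgd_momentum(grad, w0, learning_rate, momentum, steps):
    """Run SGD with classic momentum on a 1-D parameter and return its final value."""
    w, velocity = w0, 0.0
    for _ in range(steps):
        velocity = momentum * velocity - learning_rate * grad(w)
        w = w + velocity
    return w

def grad_quadratic(w):
    """Gradient of the toy loss f(w) = w**2 (minimum at w = 0)."""
    return 2.0 * w

for lr in [1e-2, 1e-3, 1e-4]:
    for momentum in [0.0, 0.9]:
        w = sgd_momentum(grad_quadratic, w0=1.0, learning_rate=lr, momentum=momentum, steps=100)
        print(f"lr={lr:g}, momentum={momentum}: |w| after 100 steps = {abs(w):.4f}")
```

On this toy problem, high momentum gets much closer to the minimum at the same learning rate, while a learning rate that is too large (try 1.1 without momentum) makes the iterates diverge. Real loss surfaces are far messier, which is why we search over both values.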

Two of the most important parameters for your Optimizer are the Learning rate and Momentum. Let’s try to find good values for those:

```python
def tune_rl_momentum_model(hp):
    model = keras.Sequential()
    model.add(keras.layers.Dense(
        units=18,
        activation="relu",
        input_shape=[X_train.shape[1]]
    ))

    model.add(keras.layers.Dense(1, activation='sigmoid'))

    # Both hyperparameters are picked from fixed lists of candidate values
    lr = hp.Choice('learning_rate', [1e-2, 1e-3, 1e-4])
    momentum = hp.Choice('momentum', [0.0, 0.2, 0.4, 0.6, 0.8, 0.9])

    model.compile(
        optimizer=keras.optimizers.SGD(learning_rate=lr, momentum=momentum),
        loss='binary_crossentropy',
        metrics=['accuracy'])
    return model
```

The procedure is identical to the one we used before. Here are the results:

```
{'learning_rate': 0.01, 'momentum': 0.4}
```

Number of parameters
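With a single hidden layer, the number of trainable parameters grows linearly with its unit count. The sketch below lists the unit counts covered by the hp.Int range used in tune_neurons_model (min_value=8, max_value=128, step=16; Keras Tuner documents max_value as inclusive, though 128 isn’t reachable with this step) and the resulting parameter counts. N_FEATURES = 12 is our own count of the columns left after one-hot encoding (Age, SibSp, Parch, Fare, two Sex, three Embarked and three Pclass columns), so treat it as an assumption.

```python
N_FEATURES = 12  # assumption: feature columns after one-hot encoding

def int_range(min_value, max_value, step):
    """Values covered by an integer range whose max_value is inclusive."""
    return list(range(min_value, max_value + 1, step))

def dense_param_count(n_inputs, hidden_units):
    """Weights + biases of Dense(hidden_units) followed by Dense(1)."""
    hidden = n_inputs * hidden_units + hidden_units
    output = hidden_units * 1 + 1
    return hidden + output

for units in int_range(8, 128, 16):
    print(f"{units:>3} units -> {dense_param_count(N_FEATURES, units)} parameters")
```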

We can also try to find a better value for the number of units in our hidden layer:

```python
def tune_neurons_model(hp):
    model = keras.Sequential()
    # hp.Int picks an integer from min_value to max_value in increments of step
    model.add(keras.layers.Dense(units=hp.Int('units',
                                              min_value=8,
                                              max_value=128,
                                              step=16),
                                 activation="relu",
                                 input_shape=[X_train.shape[1]]))

    model.add(keras.layers.Dense(1, activation='sigmoid'))

    model.compile(
        optimizer="adam",
        loss='binary_crossentropy',
        metrics=['accuracy'])
    return model
```

We’re using a range of values for the number of units. The range is defined by a minimum, maximum and step value. The best number of units is:

```
{'units': 72}
```

Number of hidden layers

We can use Hyperparameter tuning to find a better architecture for our model. Keras Tuner allows us to use regular Python for loops to do that:

```python
def tune_layers_model(hp):
    model = keras.Sequential()

    model.add(keras.layers.Dense(units=128,
                                 activation="relu",
                                 input_shape=[X_train.shape[1]]))

    # The number of extra hidden layers is itself a hyperparameter
    for i in range(hp.Int('num_layers', 1, 6)):
        # Each layer gets its own, uniquely named, units hyperparameter
        model.add(keras.layers.Dense(units=hp.Int('units_' + str(i),
                                                  min_value=8,
                                                  max_value=64,
                                                  step=8),
                                     activation='relu'))

    model.add(keras.layers.Dense(1, activation='sigmoid'))

    model.compile(
        optimizer="adam",
        loss='binary_crossentropy',
        metrics=['accuracy'])
    return model
```

Note that we test a different number of units for each layer. Each Hyperparameter needs a unique name, which is why we append the layer index (units_0, units_1, and so on). We get:

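```
{'num_layers': 2,
 'units_0': 32,
 'units_1': 24,
 'units_2': 64,
 'units_3': 8,
 'units_4': 48,
 'units_5': 64}
```

Not that informative: the best model has only two extra hidden layers, so only units_0 and units_1 are used, while the remaining units_* values belong to the search space but not to this model. Well, you can still get the best model and run with it.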
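If you only want the values the best model actually uses, you can filter the dictionary yourself. A small sketch; `active_hyperparameters` is a hypothetical helper that assumes the units_<i> naming scheme from tune_layers_model:

```python
def active_hyperparameters(values):
    """Keep num_layers and only the units_<i> entries with i < num_layers."""
    num_layers = values["num_layers"]
    return {
        name: value
        for name, value in values.items()
        if not name.startswith("units_") or int(name.split("_")[1]) < num_layers
    }

best_values = {'num_layers': 2, 'units_0': 32, 'units_1': 24, 'units_2': 64,
               'units_3': 8, 'units_4': 48, 'units_5': 64}
print(active_hyperparameters(best_values))  # {'num_layers': 2, 'units_0': 32, 'units_1': 24}
```

In a real session, best_values would come from `tuner.oracle.get_best_trials(num_trials=1)[0].hyperparameters.values`, as shown earlier.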

Activation function
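One option in the list below deserves a note: with a 'linear' hidden layer, Dense(32) followed by Dense(1, activation='sigmoid') is mathematically just logistic regression, because two affine maps compose into one. The pure-Python sketch below checks that numerically with made-up weights (three inputs and two hidden units, chosen only for illustration).

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, x):
    """W has one row per output unit; returns W @ x + b."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

def two_layer_linear(x, W1, b1, w2, b2):
    h = affine(W1, b1, x)  # hidden layer with a 'linear' activation
    return sigmoid(sum(a * hi for a, hi in zip(w2, h)) + b2)

def collapse(W1, b1, w2, b2):
    """Single-layer equivalent: w = W1^T w2 and b = w2 . b1 + b2."""
    w = [sum(w2[i] * W1[i][j] for i in range(len(W1))) for j in range(len(W1[0]))]
    b = sum(a * bi for a, bi in zip(w2, b1)) + b2
    return w, b

rng = random.Random(0)
W1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(2)]  # 2 hidden units, 3 inputs
b1 = [rng.uniform(-1, 1) for _ in range(2)]
w2 = [rng.uniform(-1, 1) for _ in range(2)]
b2 = rng.uniform(-1, 1)

w, b = collapse(W1, b1, w2, b2)
x = [0.5, -1.0, 2.0]
print(two_layer_linear(x, W1, b1, w2, b2), sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b))
```

So if 'linear' wins the search, a plain logistic regression on the same features might do about as well on this dataset.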

You can try out different activation functions like so:

```python
def tune_act_model(hp):
    model = keras.Sequential()

    # Candidate activation functions for the hidden layer
    activation = hp.Choice('activation',
                           [
                               'softmax', 'softplus', 'softsign', 'relu',
                               'tanh', 'sigmoid', 'hard_sigmoid', 'linear'
                           ])

    model.add(keras.layers.Dense(units=32,
                                 activation=activation,
                                 input_shape=[X_train.shape[1]]))

    model.add(keras.layers.Dense(1, activation='sigmoid'))

    model.compile(
        optimizer="adam",
        loss='binary_crossentropy',
        metrics=['accuracy'])
    return model
```

Surprisingly, we obtain the following result:

```
{'activation': 'linear'}
```

Dropout rate
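The rate we’re about to tune is the probability of zeroing each input during training. The sketch below mirrors what Keras documents for its Dropout layer: inputs are dropped with probability rate, the survivors are scaled by 1 / (1 - rate) so the expected sum is unchanged, and nothing is dropped at inference time. It is a pure-Python illustration, not the layer’s actual code.

```python
import random

def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout on a list of floats."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # dropout is a no-op at inference time
    keep_prob = 1.0 - rate
    # Zero each unit with probability `rate`, rescale the survivors
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

rng = random.Random(0)
out = dropout([1.0] * 10, rate=0.5, rng=rng)
print(out)  # each entry is either 0.0 or 2.0
```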

Dropout is a frequently used Regularization technique. Let’s try different rates:

```python
def tune_dropout_model(hp):
    model = keras.Sequential()

    # Candidate dropout rates, from no dropout up to dropping 90% of the units
    drop_rate = hp.Choice('drop_rate',
                          [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])

    model.add(keras.layers.Dense(units=32,
                                 activation="relu",
                                 input_shape=[X_train.shape[1]]))
    model.add(keras.layers.Dropout(rate=drop_rate))

    model.add(keras.layers.Dense(1, activation='sigmoid'))

    model.compile(
        optimizer="adam",
        loss='binary_crossentropy',
        metrics=['accuracy'])
    return model
```

Unsurprisingly, our model is relatively small and doesn’t benefit from regularization:

```
{'drop_rate': 0.0}
```

Complete example

We’ve dabbled with the Keras Tuner API for a bit. Let’s have a look at a somewhat more realistic example:

```python
def tune_nn_model(hp):
    model = keras.Sequential()

    model.add(keras.layers.Dense(units=128,
                                 activation="relu",
                                 input_shape=[X_train.shape[1]]))

    for i in range(hp.Int('num_layers', 1, 6)):
        # Each hidden layer gets its own units and dropout hyperparameters
        units = hp.Int(
            'units_' + str(i),
            min_value=8,
            max_value=64,
            step=8
        )
        model.add(keras.layers.Dense(units=units, activation='relu'))
        drop_rate = hp.Choice('drop_rate_' + str(i),
                              [
                                  0.0, 0.1, 0.2, 0.3, 0.4,
                                  0.5, 0.6, 0.7, 0.8, 0.9
                              ])
        model.add(keras.layers.Dropout(rate=drop_rate))

    model.add(keras.layers.Dense(1, activation='sigmoid'))

    model.compile(
        optimizer="adam",
        loss='binary_crossentropy',
        metrics=['accuracy'])
    return model
```

Yes, tuning parameters can complicate your code. One thing that might help is to separate the possible Hyperparameter values from the model building code.
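One way to do that is to keep the search space as plain data and have the build function read from it. The sketch below is our own arrangement, not a Keras Tuner feature: `SEARCH_SPACE`, `register` and `layer_plan` are hypothetical names, and `register` only forwards to the hp.Int and hp.Choice calls used throughout this article. The tiny `FirstValueHP` class exists only so the sketch runs without Keras Tuner.

```python
# Search space kept as plain data, separate from the model-building logic
SEARCH_SPACE = {
    "num_layers": {"type": "int", "min_value": 1, "max_value": 6},
    "units": {"type": "int", "min_value": 8, "max_value": 64, "step": 8},
    "drop_rate": {"type": "choice",
                  "values": [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]},
}

def register(hp, name, spec):
    """Declare one hyperparameter on `hp` from its spec and return the value for this trial."""
    if spec["type"] == "int":
        return hp.Int(name, min_value=spec["min_value"],
                      max_value=spec["max_value"], step=spec.get("step", 1))
    if spec["type"] == "choice":
        return hp.Choice(name, spec["values"])
    raise ValueError(f"unknown hyperparameter type: {spec['type']!r}")

def layer_plan(hp, space=SEARCH_SPACE):
    """Return [(units, drop_rate), ...], one pair per hidden layer."""
    plan = []
    for i in range(register(hp, "num_layers", space["num_layers"])):
        units = register(hp, f"units_{i}", space["units"])
        drop_rate = register(hp, f"drop_rate_{i}", space["drop_rate"])
        plan.append((units, drop_rate))
    return plan

class FirstValueHP:
    """Hypothetical stand-in for `hp`: always picks the smallest / first option."""

    def __init__(self):
        self.values = {}

    def Int(self, name, min_value, max_value, step=1):
        self.values[name] = min_value
        return min_value

    def Choice(self, name, values):
        self.values[name] = values[0]
        return values[0]

hp = FirstValueHP()
print(layer_plan(hp))  # [(8, 0.0)]
print(hp.values)       # {'num_layers': 1, 'units_0': 8, 'drop_rate_0': 0.0}
```

Inside tune_nn_model, you would call layer_plan(hp) and add a Dense(units, activation='relu') and a Dropout(rate=drop_rate) layer for each pair; changing the search space then means editing SEARCH_SPACE only.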

Bayesian Optimization

The Bayesian Tuner provides the same API as Random Search. In practice, this method should be as good as (if not better than) the Grad student hyperparameter tuning method. Let’s have a look:

```python
from kerastuner.tuners import BayesianOptimization

b_tuner = BayesianOptimization(
    tune_nn_model,
    objective='val_accuracy',
    max_trials=MAX_TRIALS,
    executions_per_trial=EXECUTIONS_PER_TRIAL,
    directory='test_dir',
    project_name='b_tune_nn',
    seed=RANDOM_SEED
)
```

This method might need significantly fewer trials than Random Search to find good parameter values, but this is highly problem-dependent. I would recommend using this Tuner for most practical problems.

scikit-learn model tuning

Despite its name, Keras Tuner allows you to tune scikit-learn models too! Let’s try it out on a RandomForestClassifier:

```python
import kerastuner as kt  # the package was later renamed to keras_tuner
from sklearn import ensemble
from sklearn import metrics
from sklearn import model_selection

def build_tree_model(hp):
    return ensemble.RandomForestClassifier(
        n_estimators=hp.Int('n_estimators', 10, 80, step=5),
        max_depth=hp.Int('max_depth', 3, 10, step=1),
        # 'auto' was removed in scikit-learn 1.3; for classifiers it meant 'sqrt'
        max_features=hp.Choice('max_features', ['auto', 'sqrt', 'log2'])
    )
```

We’ll tune the number of trees in the forest (n_estimators), the maximum depth of the trees (max_depth), and the number of features to consider when choosing the best split (max_features).
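For intuition about max_features: scikit-learn turns 'sqrt' into max(1, int(sqrt(n_features))) and 'log2' into max(1, int(log2(n_features))) candidate features per split, and 'auto' meant 'sqrt' for classifiers. Assuming 12 feature columns (our own count after one-hot encoding), a quick check in plain Python:

```python
import math

def features_per_split(n_features, max_features):
    """Candidate features per split for the string options used in build_tree_model."""
    if max_features in ("auto", "sqrt"):  # 'auto' meant 'sqrt' for RandomForestClassifier
        return max(1, int(math.sqrt(n_features)))
    if max_features == "log2":
        return max(1, int(math.log2(n_features)))
    raise ValueError(f"unsupported option: {max_features!r}")

for option in ["auto", "sqrt", "log2"]:
    print(option, features_per_split(12, option))  # all three give 3 for 12 features
```

Under that assumption, all three options consider the same number of features per split, so effectively only n_estimators and max_depth are being searched.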

The Tuner expects an optimization strategy (Oracle). We’ll use Bayesian Optimization:

```python
sk_tuner = kt.tuners.Sklearn(
    oracle=kt.oracles.BayesianOptimization(
        objective=kt.Objective('score', 'max'),
        max_trials=MAX_TRIALS,
        seed=RANDOM_SEED
    ),
    hypermodel=build_tree_model,
    scoring=metrics.make_scorer(metrics.accuracy_score),
    cv=model_selection.StratifiedKFold(5),
    directory='test_dir',
    project_name='tune_rf'
)
```

The rest of the API is identical:

```python
sk_tuner.search(X_train.values, y_train.values)
```

The best parameter values are:

```python
sk_tuner.oracle.get_best_trials(num_trials=1)[0].hyperparameters.values
```

```
{'max_depth': 4, 'max_features': 'sqrt', 'n_estimators': 60}
```

Conclusion

There you have it. You now know how to search for good Hyperparameters for Keras and scikit-learn models.

Remember the three requirements that need to be in place before starting the search:

- You have intimate knowledge of your data

- You have an end-to-end framework/skeleton for running experiments

- You have a systematic way to record and check the results of the searches

Keras Tuner can help you with the last step.
